A mistake often made by Database Administrators, Engineers and Analysts is to assume a bigger warehouse leads to higher costs. In reality, the situation is more nuanced, and often running a larger warehouse can in fact reduce the overall cost of ownership. Additionally, maximizing query performance can help deliver insights faster and free up end-user time.

In this article, we’ll discuss the time-cost paradox of using Snowflake. That you can often get the same work done twice as fast for the same compute cost and therefore save “people time”. Valuable time, that can then be used to do more useful stuff.

“Time is Money” - Benjamin Franklin

The Big Warehouse Advantage

So, here's the scoop. We've all been there, meticulously crafting our queries, only to play the waiting game as they chug along on modestly sized warehouses. But imagine if we could slash those wait times without a nasty surprise in the bill? That's exactly what's happening for those daring enough to scale up their Snowflake warehouses.

It's like suddenly finding an express lane on the freeway that was hidden in plain sight.

To demonstrate the incredible power of moving to a bigger warehouse, I executed a query to benchmark just how fast Snowflake can go.

I executed the SQL below which is deliberately has no WHERE clause and performance acceleration features like Snowflake Data Clustering, Search Optimization Service or even the Snowflake Cache won’t help.

use warehouse benchmark;

insert into store_sales as

select *

from sample_data.tpcds_sf10tcl.store_sales;

alter warehouse benchmark suspend;

The INSERT statement is a monster query, which fetches and inserts around 1.3TB of data or 28 billion rows from the standard Snowflake Sample Data Sets which means you too can repeat the benchmark test yourself.

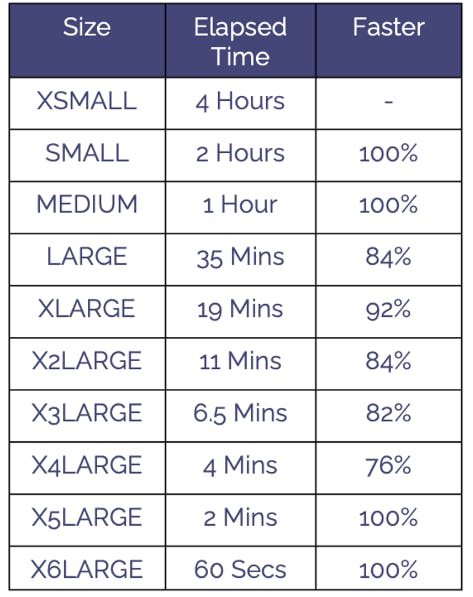

The table below illustrates the incredible performance boost from simply running a bigger virtual warehouse.

Notice as we increased the warehouse size (a simple operation using the SQL statement below), that each time, the exact same query completed twice as fast.

alter warehouse benchmark

set warehouse_size = 'MEDIUM';

To put this in context, before working with Snowflake, I worked for a major Swiss Investment bank in London, and it used to take around four hours to copy a terabyte of data on a completely empty production database server on a Sunday afternoon. However, in that case, the database server was an on-premises fixed size machine and scaling up would have taken weeks (if not months) to complete and involve a multi-million dollar investment. In short, it was simply not an option.

The Speed-Cost Paradox

Now, you might be thinking, "But wait, won't bigger warehouses just ramp up my costs?" That's the paradox! With Snowflake's unique pricing model, where you pay for compute time (down to the second), the math starts to look a lot better. A query that tears through on a larger warehouse can actually cost you the same as it dawdles in a smaller one because you're billed for how long the query runs, not simply how much power it's using.

“It’s perfectly possible to get the same work done, twice as fast for the same cost”

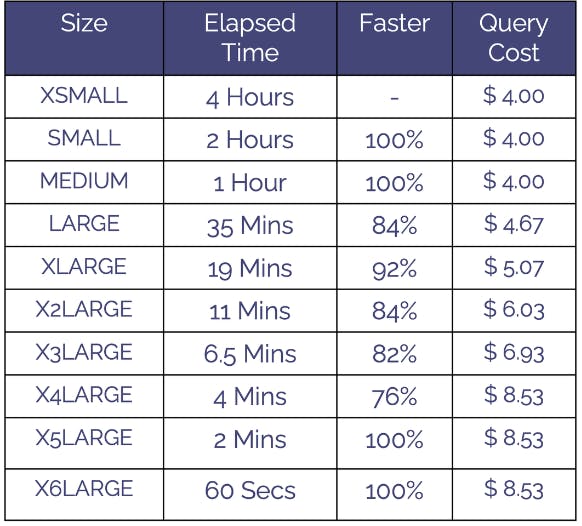

Consider the table below which shows the actual cost of executing the above benchmark query each time on a larger virtual warehouse.

Notice the cost remains about the same each time we increase the warehouse size. In fact, provided the query runs twice as fast, (for example as the query runs four times faster from an X-SMALL to a MEDIUM), the cost remains the same.

This means it’s perfectly possible to get the same work done, twice as fast for the same cost.

Notice however, that as we increase warehouse size to the stratospheric level of and X4-LARGE and above, the cost starts to creep up?

The Law of Diminishing Returns

“You cannot defy the laws of physics” - Albert Einstein. “…But Snowflake bends the rules” - John Ryan

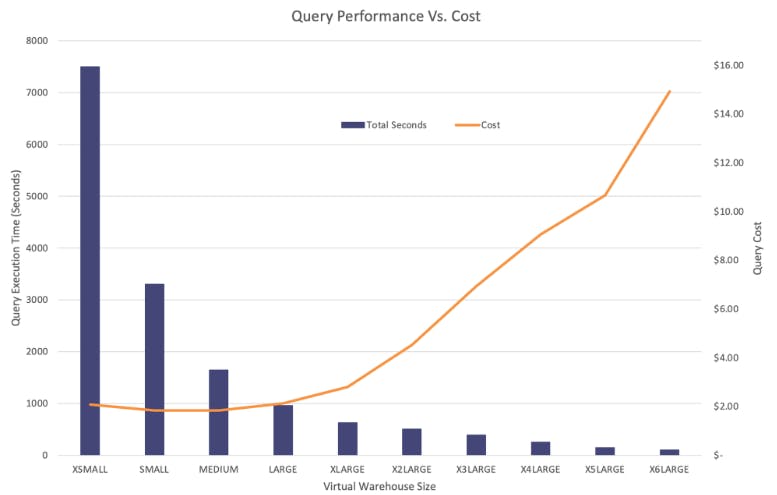

It should be no surprise however, that even Snowflake cannot defy either the Laws of Physics or indeed the Law of Diminishing Returns and the graph below illustrates a subtle point to keep in mind when increasing virtual warehouse size. In this benchmark test, we executed a query which summarized a few million rows scanning gigabytes rather than terabytes of data.

The above graph demonstrates that as you increase virtual warehouse size, the query performance is reduced, in many cases halved each time. However, as the elapsed time is improved by less than 100%, the corresponding query cost increases. This is because the charge rate doubles at each step, along with the potential throughput.

In the case illustrated above, the query cost remains fairly flat from an XSMALL to a LARGE sized warehouse, but then increases steadily with warehouse size following the Law of Diminishing Returns.

“Small/simple queries typically do not need an X-Large (or larger) warehouse because they do not necessarily benefit from the additional resources” - Snowflake Docs.

The reason for the increase in cost was that unlike the benchmark query above that copied a terabyte of data, this query processed a smaller data volume (a few gigabytes), and was therefore unable to use all the compute resources of the larger warehouse sizes.

For example, we can see this in the terabyte size benchmark above when the warehouse was scaled from a MEDIUM to a LARGE warehouse. Although the query performance was improved by 84%, the charge rate doubled, which meant the query cost increased from $4 to $4.67.This cost increase may be perfectly acceptable, it depends upon the business priority of spend ($4 or $4.67) over query elapsed time (60 minutes or 35 minutes).

You can objectively assess the extent to which queries are using all nodes in a cluster using the following SQL statement which returns the average cluster load and the number of queries which use 100% of warehouse nodes.

select warehouse_name

, warehouse_size

, round(avg(case when query_load_percent > 0 then query_load_percent else null end)) as avg_cluster_load

, sum(case when query_load_percent = 100 then 1 else 0 end) as queries_100_pct_load

, count(*) as count_queries

from snowflake.account_usage.query_history

where warehouse_size is not null

and execution_status = 'SUCCESS'

and start_time < dateadd('days',-7,current_timestamp())

group by all

order by warehouse_size, warehouse_name;

To be clear, the above benchmark query performance was improved at each step, but after a LARGE warehouse, the performance gain was less than 100% which therefore led to a slightly increased cost as each warehouse size increase doubles the cost rate per second.

The key point here is the need to be careful when allocating a virtual warehouse size. You should continue to increase warehouse size until the average execution time improvements of running on a larger warehouse consistently drop below 100% over several days. It’s then a simple decision - if faster query performance more important than the increased cost continue to try larger warehouses. Otherwise stop at the optimum warehouse size.

Time Vs. Money

“A cynic is a person who knows the price of everything and the value of nothing” - Oscar Wilde

It’s easy to get caught up in the assumption that a larger warehouse leads to higher costs, however, as we can see above, in many cases we can get the “same work done twice as fast for the same cost”.

So let’s consider the value of time saved.

Every minute you can save by improving query performance is valuable time spend free to discover additional insights. For teams across the board, from data engineers waiting for ETL pipelines to complete, to analysts identifying new insights, this time-saving translates directly into cost savings and efficiency gains.

But to put it in stark terms, let’s consider the example of the dollar value of time. Given the average hourly rate of a freelance Data Scientist (one of the most expensive resources on a project) is around the $80 an hour, that compares well to the cost of an X-Large virtual warehouse at around $48 an hour assuming $3.00 per credit.

Again, to put this in context, an X-Large warehouse provides a cluster of 16 nodes with a total of 128 cores and is capable of copying over a terabyte of data in under 20 minutes. That means a Data Scientist could process up to six terabytes of data on an X-Large before the compute cost exceeded their hourly charge rate.

A Shift in Strategy

Adopting a larger-is-smarter approach might feel like a stretch, but it's more of a strategic step forward. It's about weighing the direct costs of compute resources against the immense value of your team's time.

Here's how to make the leap feel more like a smart, calculated stride:

Benchmark Like a Boss: Before deploying a new project to production, benchmark the 90th percentile execution performance Vs. overall cost on a range of warehouses. Start with a smaller size and scale up until you hit diminishing returns.

Keep an Eye on the Clock (and the Wallet):Monitor Snowflake execution time and cost to ensure you’re maximizing value from the investment.

ConsiderSnowflake’s Advanced Performance Tools: In addition to optimizing Snowflake queries, consider using advanced tuning tools including, Snowflake Data Clustering, Search Optimization Service or Query Acceleration Service which often help improve query performance at little or no additional cost.

Wrapping It Up

So, what's the moral of our story? In the ever-evolving world of data, staying open to new strategies can lead to surprising efficiency gains.

Scaling up Snowflake warehouses could be a game-changer, improving query performance and with faster time to value . It's all about finding that perfect balance, where your queries fly through the data at lightning speed, and your costs stay grounded.

Ready to think big? Your data team will thank you.

DataPilot from Altimate.ai can proactively monitor and provide you with detailed insights on how and where time can be saved. If you, too, want to leverage this feature and save development time without increasing the cost, why not get in touch?

Click on the image below to book a demo.